These seem to get longer and longer. A whole pile of links for you.

Management and Organisational Behaviour

How Serving Is Your Leadership? - Who is working for who here?

Be a Manager - “The only reason there’s so many awful managers is that good people like you refuse to do the job.”

I’m the Boss! Why Should I Care If You Like Me? - Because your team will be more productive… Here are some pointers.

Software Development

Technical debt 101 - Do you think you know what technical debt is and how to tackle it? Even so, I’m sure this article has more for you to discover and learn. A must-read.

Heisenberg Developers - So true. In fact this hits a little close to home since we use JIRA, the bug tracking tool mentioned in the article.

What is Defensive Coding? - Many think that defensive coding is just making sure you handle errors correctly but that is a small part of the process.

Need to Learn More about the Work You’re Doing? Spike It! - So you are an agile shop, your boss is demanding some story estimates and you have no idea how complex the piece of work is because it’s completely new. What do you do?

Software Development with Feature Toggles - Don’t branch, toggle instead.
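As a rough illustration of "toggle instead of branch", here is a minimal Python sketch. It is not taken from the linked article: the toggle name `new_banner`, the `TOGGLES` dictionary and both functions are hypothetical, and a real system would more likely read toggles from configuration than from a hard-coded dictionary.

```python
# Hypothetical in-memory toggle store; assume real values come from config.
TOGGLES = {"new_banner": False}


def is_enabled(name, toggles=TOGGLES):
    """Unknown toggles default to off, so unfinished code stays dark."""
    return toggles.get(name, False)


def render_banner(toggles=TOGGLES):
    # Both code paths live on the main line; the toggle picks one at runtime.
    if is_enabled("new_banner", toggles):
        return "Welcome to the new site"
    return "Welcome"


print(render_banner())                      # old path while the toggle is off
print(render_banner({"new_banner": True}))  # new path once it is switched on
```

The unfinished feature ships in the same codebase as everything else and is switched on when it is ready, which is what removes the need for a long-lived feature branch.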

Agile practices roundup - here are a number of articles I’ve found useful recently:

- 4 Reasons to Include Developers in Story Writing

- 2 Times to Play Planning Poker and 1 Time Not To

- Benefits of Pair Programming

- Scheduling Pairing Sessions

- Inside a Pairing Session

How to review a merge commit - Phil dives into the misunderstood world of merge commits and reviews. Also see this list of things to look out for during code reviews.

Functional Programming

Don’t Be Scared Of Functional Programming - A good introduction to functional programming concepts using JavaScript as the demonstration language.

Seamlessly integrating T-SQL and F# in the same code - The latest version of FSharp.Data allows you to write syntax checked SQL directly in your F# source and it executes as fast as Dapper.

Railway Oriented Programming - This is a functional technique, but I’ve recently been using it in C# when I needed to process many items in a sequence, any of which could fail, and I wanted to collect all the errors for reporting back to ops. It is harder to do in C# since there are no discriminated unions, but a custom wrapper class is enough.
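The wrapper-class idea can be sketched roughly as follows. This is a Python illustration rather than the C# code described above, and every name in it (`Ok`, `Err`, `bind`, `parse_quantity`, `check_positive`, `process_all`) is hypothetical. Each step returns either a success or a failure, `bind` skips later steps once an item has failed, and the errors are gathered at the end.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ok:
    value: object


@dataclass(frozen=True)
class Err:
    message: str


def bind(result, step):
    """Run the next step only on the success track; failures pass through."""
    return step(result.value) if isinstance(result, Ok) else result


def parse_quantity(raw):
    try:
        return Ok(int(raw))
    except ValueError:
        return Err(f"not a number: {raw!r}")


def check_positive(n):
    return Ok(n) if n > 0 else Err(f"must be positive: {n}")


def process_all(items):
    """Process every item, keeping good values and all error messages."""
    values, errors = [], []
    for raw in items:
        result = bind(parse_quantity(raw), check_positive)
        if isinstance(result, Ok):
            values.append(result.value)
        else:
            errors.append(result.message)
    return values, errors


values, errors = process_all(["3", "x", "-1", "7"])
print(values)  # [3, 7]
print(errors)  # ["not a number: 'x'", 'must be positive: -1']
```

One failing item does not stop the run, so the full list of errors is available for reporting in one go.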

Erlang and code style - A different language this time, Erlang. How easy is programming when you don’t have to code defensively and crashing is the preferred way of handling errors?

Twenty six low-risk ways to use F# at work - Some great ways to get into F# programming without risking your current project.

A proposal for a new C# syntax - A lovely way to look at writing C# using a familiar but lighter-weight syntax. C# 6 has some of these features planned, but this goes further. Do check out the link at the end of the final proposal.

Excel-DNA: Three Stories - Integrating F# into Excel - a data analyst’s dream…

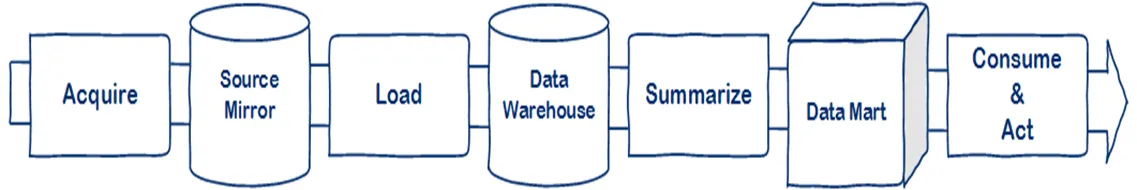

Data Warehousing

Signs your Data Warehouse is Heading for the Boneyard - Some interesting things to look out for if you hold the purse strings to a data warehouse project. How many have you seen before?

The 3 Big Lies of Data - I’ve heard these three lies over and over from business users and technology vendors alike. Who is kidding who?

Six things I wish we had known about scaling - Not specifically about data warehouses but these are all issues we see on a regular basis.

Why Hadoop Only Solves a Third of the Growing Pains for Big Data - You can’t just go and install a Hadoop cluster. There is more to it than that.

Microsoft Azure Machine Learning - Finally it looks like we can have a simple way of doing cloud scale data mining.

Data Visualization

5 Tips to Good Vizzin’ - So many visualizations break these rules.

Five indicators you aren’t using Tableau to its full potential - I’ve seen a few of these recently - tables anyone?

Create a default Tableau Template - Should save some time when you have a pile of dashboards to create.

Building a Tableau Center of Excellence - Tableau is easy to misunderstand, and a very effective sales team does not help. This article has some great advice for introducing Tableau into your organisation.

Beginner’s guide to R: Painless data visualization - Some simple R data visualization tips.

Visualizing Data with D3 - If you need complete control over your visualization then D3 is just what you need. It can be pretty low-level but it’s easy to produce some amazing stuff with a bit of JavaScript programming.

Testing

I Don’t Have Time for Unit Testing - I’ve recently been guilty of this myself so I like to keep a reminder around - you will go faster if you write tests.

Property Based Testing with FsCheck - FsCheck is a fantastic tool primarily used for testing F# code, but there is no reason it can’t be used with C# too. It automatically generates test cases to explore the boundaries of your code. I love the concise nature of F# test code too, especially with proper sentences for test names.
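To give a feel for the idea without FsCheck itself, here is a small hand-rolled Python sketch. It does not use or imitate FsCheck’s API: `check_property`, `random_int_list` and the two example properties are hypothetical names, and a real tool adds features such as shrinking a failing case down to a minimal one.

```python
import random


def check_property(prop, generate, runs=200, seed=0):
    """Try the property on many generated inputs.

    Returns the first counterexample found, or None if every case passed.
    """
    rng = random.Random(seed)
    for _ in range(runs):
        case = generate(rng)
        if not prop(case):
            return case
    return None


def random_int_list(rng):
    """Generate a list of 0 to 20 integers between -100 and 100."""
    return [rng.randint(-100, 100) for _ in range(rng.randint(0, 20))]


def reversing_twice_gives_the_original_list(xs):
    return list(reversed(list(reversed(xs)))) == xs


def reversing_once_gives_the_original_list(xs):
    # Deliberately false for most lists, to show a counterexample being found.
    return list(reversed(xs)) == xs


passed = check_property(reversing_twice_gives_the_original_list, random_int_list)
failed = check_property(reversing_once_gives_the_original_list, random_int_list)
print(passed)  # None, because the property held for every generated list
print(failed)  # a generated list that is not equal to its own reverse
```

The test states a rule that should hold for all inputs and leaves the choice of inputs to the generator, instead of listing examples by hand.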

Analysis Services

I’ve collected a lot of useful links for Analysis Services, both tabular and multidimensional:

DAX Patterns website - This website is my go-to resource for writing DAX calculations. These two are particularly useful:

Using Tabular Models in a Large-scale Commercial Solution - Experiences of SSAS tabular in a large solution. Some tips, tricks and things to avoid.

Also:

- Experiences & Tabular Tips

- Row and Column (Cell) based security in SSAS Tabular Model

- How connections will hurt your Tabular Workload

- Listing Active Queries with PowerShell

- How to build your own SSAS Resource Governor with PowerShell

- 5 Tools for Understanding BISM Storage

- How to Automate SSAS Cube Partitioning in SSIS

- How to turn off/on bitmap indexes in SSAS

- Context Aware And Customised Drillthrough
